Data is the most important asset for many businesses in the modern economy, and the rapid evolution of Generative AI (GenAI) in the form of large language models (LLMs) is unlocking unprecedented opportunities to extract value from that data. By augmenting a public LLM with in-house information, companies can potentially reap a bottom-line-boosting mix of enhanced creative content, personalized customer experiences, improved analysis, and breakthrough advances in product development and automation. All those benefits and more may be possible with successful in-house chatbot implementations, but LLM model training typically requires significant hardware resources. To get the most out of any new AI-related hardware investment, it’s important to know which component upgrades can positively impact AI performance.

Setting up a multi-node server cluster to distribute training workloads across multiple GPUs can be an important part of implementing an in-house LLM. In such an environment, low latency and high bandwidth between GPUs are important, so the decision to choose one type of network interface card (NIC) over another can have a direct impact on how well the solution performs.

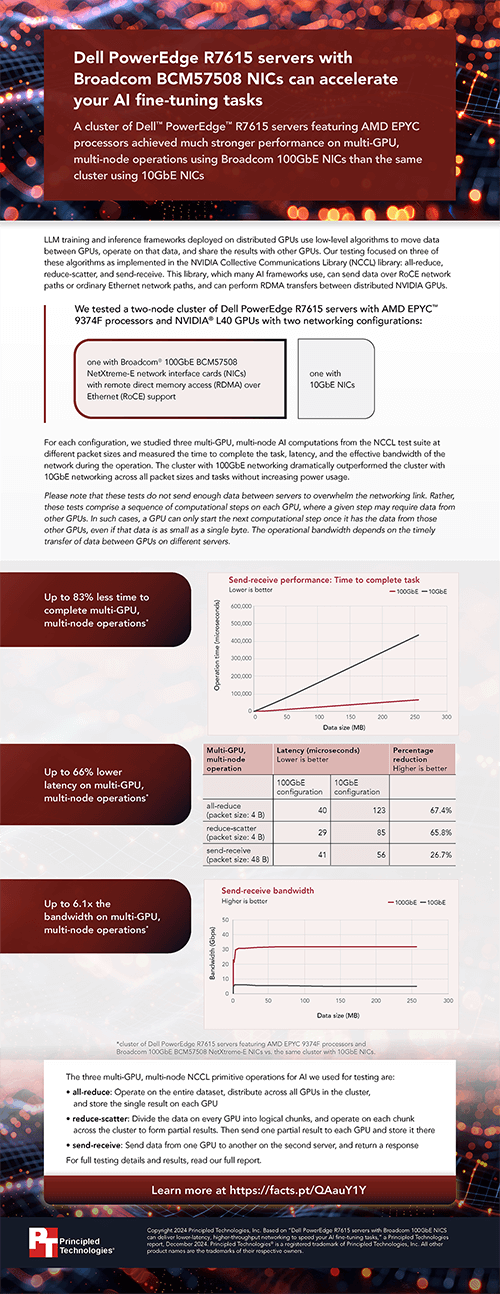

To see how different NICs might affect AI tuning, we tested the performance of a two-node Dell cluster with two different networking configurations: one with Broadcom 100GbE BCM57508 NetXtreme-E NICs and the other with Broadcom 10GbE BCM57414 NICs. The cluster consisted of two Dell PowerEdge R7615 servers with AMD EPYC 9374F processors and NVIDIA L40 GPUs.

For each configuration, we studied three AI computations at packet sizes ranging from 4 B to 256 MB and measured the time it took to complete each operation and the network’s effective bandwidth during that operation. In every scenario, the cluster with 100GbE networking dramatically outperformed the cluster with 10GbE networking. Compared to the 10GbE networking configuration, the decrease in latency with the 100GbE NIC ranged from 26 percent to 67 percent, and the operational bandwidth was 3.7 to 6.1 times as high. In addition, the 100GbE cluster achieved these tremendous gains without increasing power usage.

To dig into the details of our AI-oriented multi-node, multi-GPU network configuration performance comparison tests—and read more about how they could benefit you—check out the summary, report, and infographic below.

Principled Technologies is more than a name: Those two words power all we do. Our principles are our north star, determining the way we work with you, treat our staff, and run our business. And in every area, technologies drive our business, inspire us to innovate, and remind us that new approaches are always possible.